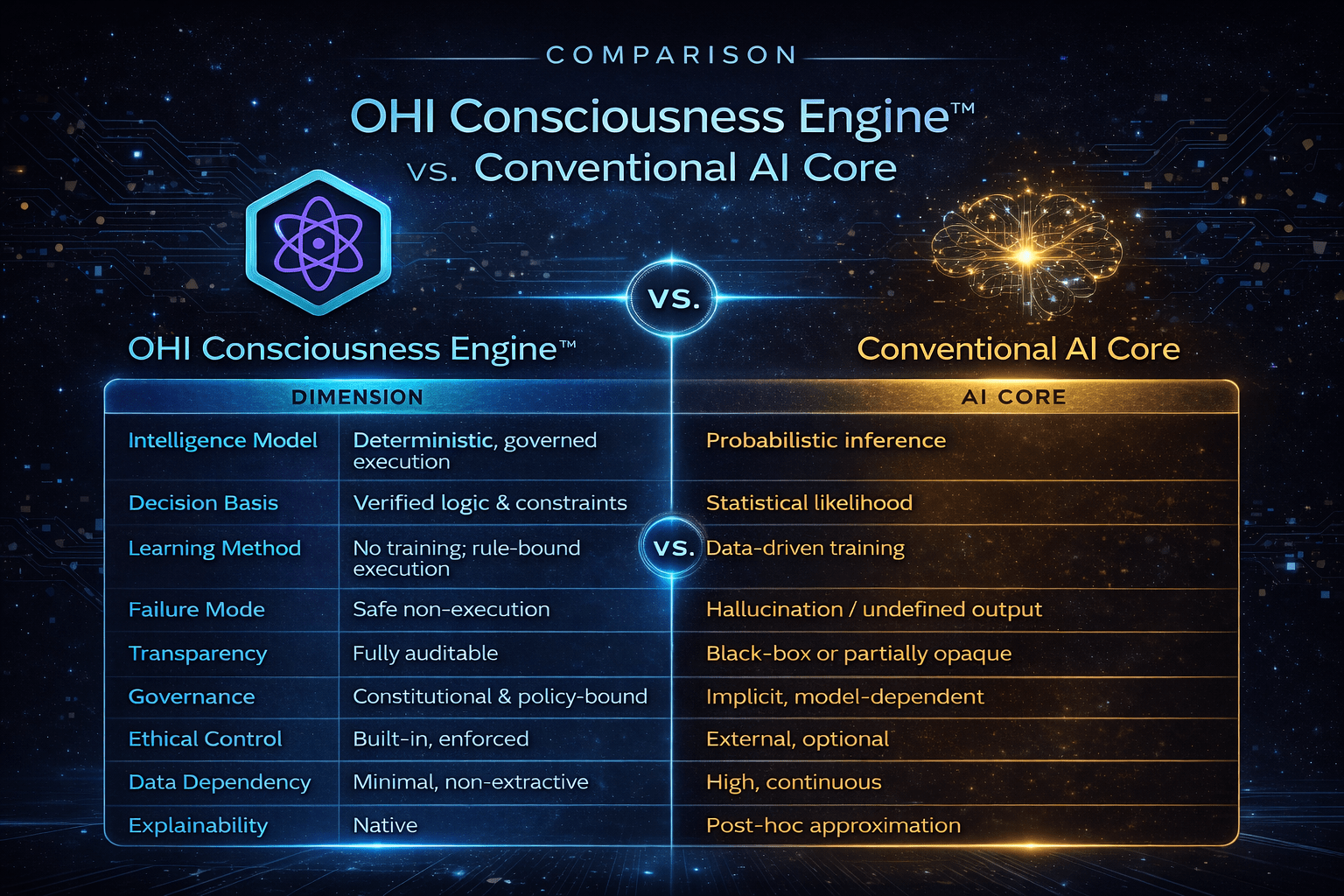

Comparison: OHI Consciousness Engine™ vs. Conventional AI Core

Overview

Modern artificial intelligence cores are optimized for prediction, pattern recognition, and statistical approximation. While effective in narrow domains, these systems introduce unacceptable risks in safety-critical, financial, legal, and sovereign environments due to their probabilistic nature, opacity, and lack of governance.

The OHI Consciousness Engine™ was architected to address these limitations by replacing probabilistic inference with deterministic, consciousness-aligned, and constitutionally governed intelligence execution.

This section outlines the fundamental differences.

Core Architectural Differences

| Dimension | OHI Consciousness Engine™ | Conventional AI Core |
|---|---|---|
| Intelligence Model | Deterministic, governed execution | Probabilistic inference |
| Decision Basis | Verified logic & constraints | Statistical likelihood |
| Learning Method | No training; rule-bound execution | Data-driven training |
| Failure Mode | Safe non-execution | Hallucination / undefined output |
| Transparency | Fully auditable | Black-box or partially opaque |
| Governance | Constitutional & policy-bound | Implicit, model-dependent |
| Ethical Control | Built-in, enforced | External, optional |
| Data Dependency | Minimal, non-extractive | High, continuous |
| Explainability | Native | Post-hoc approximation |

Decision Integrity

AI Core

AI systems determine outcomes by selecting the most probable output given patterns learned from training data. This approach:

- Cannot guarantee correctness

- Can produce plausible but false outputs

- Degrades under edge conditions

- Cannot natively explain why a decision was made

In safety-critical environments, this leads to unpredictable behavior.

OHI Consciousness Engine™

The OHI engine executes only decisions that satisfy:

- Pre-defined logical constraints

- Contextual coherence checks

- Ethical and constitutional boundaries

- Verifiable outcome conditions

If a decision cannot be verified, it is not executed.
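The verify-then-execute rule above can be sketched as follows. This is a minimal sketch that assumes each of the four conditions can be expressed as a boolean check over a proposed decision. The names `gate` and `GateResult` are hypothetical illustrations, as are the example checks and field names in `CHECKS`. None of them is part of a published OHI interface.

```python
from dataclasses import dataclass
from typing import Callable, Mapping

# Hypothetical check signature: receives a proposed decision, returns True if it passes.
Check = Callable[[Mapping[str, object]], bool]


@dataclass(frozen=True)
class GateResult:
    executed: bool
    failed_checks: tuple[str, ...]  # names of checks that did not pass


def gate(decision: Mapping[str, object], checks: Mapping[str, Check]) -> GateResult:
    """Evaluate every check; the decision may execute only if none of them fail."""
    failed = tuple(name for name, check in checks.items() if not check(decision))
    return GateResult(executed=not failed, failed_checks=failed)


# Illustrative checks, one per condition listed above (all hypothetical).
CHECKS: dict[str, Check] = {
    # Pre-defined logical constraint: the amount must be numeric and in range.
    "logical_constraints": lambda d: isinstance(d.get("amount"), (int, float))
    and 0 <= d["amount"] <= 10_000,
    # Contextual coherence: the request must match the account it targets.
    "contextual_coherence": lambda d: d.get("currency") is not None
    and d.get("currency") == d.get("account_currency"),
    # Ethical/constitutional boundary: the requester must be explicitly authorized.
    "constitutional_boundaries": lambda d: d.get("requester_authorized") is True,
    # Verifiable outcome: the expected post-condition must be stated up front.
    "verifiable_outcome": lambda d: "expected_balance" in d,
}

if __name__ == "__main__":
    incomplete = {"amount": 500, "currency": "EUR", "account_currency": "EUR"}
    print(gate(incomplete, CHECKS))
    # GateResult(executed=False, failed_checks=('constitutional_boundaries', 'verifiable_outcome'))
```

The gate evaluates every check rather than stopping at the first failure. As a result, a refusal lists all unmet conditions, and the non-execution is explainable.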

Hallucination Risk

| Scenario | AI Core Behavior | OHI Engine Behavior |
|---|---|---|
| Insufficient data | Fabricates output | Rejects execution |
| Conflicting signals | Blends probabilities | Enforces constraint hierarchy |
| Novel situation | Guesses | Escalates or pauses |
| Adversarial input | Vulnerable | Deterministically filtered |

Key distinction:

AI attempts to answer.

OHI™ prioritizes correctness over producing a response.
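The four behaviors in the table above can be sketched as a single deterministic dispatch function. This minimal illustration rests on several hypothetical assumptions:

- The request fields, the field allowlist, and the set of known request kinds are all invented for the example.
- The speed-ceiling rule is likewise invented.
- The rule ordering stands in for whatever constraint hierarchy a real deployment would define.

```python
import math
from enum import Enum
from typing import Callable, Mapping, Optional, Sequence


class Action(Enum):
    EXECUTE = "execute"
    REJECT = "reject"
    ESCALATE = "escalate"


Request = Mapping[str, object]
# A rule returns an Action when it applies, or None to defer to lower-priority rules.
Rule = Callable[[Request], Optional[Action]]

# Hypothetical input contract.
ALLOWED_FIELDS = frozenset({"kind", "speed_kmh", "operator_id"})
REQUIRED_FIELDS = frozenset({"kind", "speed_kmh"})
KNOWN_KINDS = frozenset({"speed_adjust", "brake_request"})


def speed_ceiling_rule(req: Request) -> Optional[Action]:
    # Highest priority: never act on a request above a hard speed ceiling.
    return Action.REJECT if req["speed_kmh"] > 120 else None


def default_rule(req: Request) -> Optional[Action]:
    # Lowest priority: permit anything no higher-ranked rule has objected to.
    return Action.EXECUTE


# Constraint hierarchy, highest priority first.
HIERARCHY: Sequence[Rule] = (speed_ceiling_rule, default_rule)


def decide(req: Request) -> Action:
    fields = set(req)
    if not fields <= ALLOWED_FIELDS:
        return Action.REJECT  # adversarial or unexpected input: filtered out
    if not REQUIRED_FIELDS <= fields:
        return Action.REJECT  # insufficient data: refuse rather than fill gaps
    kind, speed = req["kind"], req["speed_kmh"]
    if (
        not isinstance(kind, str)
        or isinstance(speed, bool)
        or not isinstance(speed, (int, float))
        or not math.isfinite(speed)
    ):
        return Action.REJECT  # malformed values: filtered out
    if kind not in KNOWN_KINDS:
        return Action.ESCALATE  # novel situation: hand off instead of guessing
    for rule in HIERARCHY:  # conflicting rules: the first applicable one wins
        verdict = rule(req)
        if verdict is not None:
            return verdict
    return Action.REJECT  # no rule applied: fail closed
```

A request above the ceiling triggers both `speed_ceiling_rule` (reject) and `default_rule` (execute). The hierarchy resolves that conflict by rank rather than by blending the two outcomes.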

Governance & Control

AI Core

- Governance is indirect

- Behavior emerges from training data

- Policy enforcement is external

- Control degrades as models scale

OHI Consciousness Engine™

- Governance is intrinsic

- Policies are executable constraints

- Authority is explicit and inspectable

- Scale increases stability, not risk

This enables deployment in environments where legal, financial, or life-critical accountability is mandatory.
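The properties "policies are executable constraints" and "authority is explicit and inspectable" can be illustrated with a minimal policy registry. This is a sketch under assumptions only. The `Policy` and `PolicyRegistry` types are hypothetical examples, as are the policy ID, the authority label, and the transfer cap. None of them is a documented OHI API.

```python
from dataclasses import dataclass
from typing import Callable, Mapping

ProposedAction = Mapping[str, object]


@dataclass(frozen=True)
class Policy:
    policy_id: str
    authority: str  # who enacted the policy: recorded explicitly, never inferred
    description: str
    predicate: Callable[[ProposedAction], bool]  # the policy itself, as an executable check


class PolicyRegistry:
    """Holds active policies; every one can be evaluated and inspected."""

    def __init__(self) -> None:
        self._policies: dict[str, Policy] = {}

    def enact(self, policy: Policy) -> None:
        if policy.policy_id in self._policies:
            raise ValueError(f"policy {policy.policy_id!r} is already enacted")
        self._policies[policy.policy_id] = policy

    def violations(self, action: ProposedAction) -> list[str]:
        """Return the IDs of every policy the proposed action would violate."""
        return [p.policy_id for p in self._policies.values() if not p.predicate(action)]

    def inspect(self) -> list[tuple[str, str, str]]:
        """List each active policy with the authority that enacted it."""
        return [(p.policy_id, p.authority, p.description) for p in self._policies.values()]


# Hypothetical example policy.
registry = PolicyRegistry()
registry.enact(Policy(
    policy_id="FIN-001",
    authority="compliance-office",
    description="Single transfers are capped at 10,000",
    predicate=lambda a: isinstance(a.get("amount"), (int, float)) and a["amount"] <= 10_000,
))
```

Policies here are data rather than learned weights. `inspect()` can therefore answer "which rules are in force, and on whose authority" directly.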

Data Ethics & Sovereignty

| Aspect | OHI Consciousness Engine™ | AI Core |
|---|---|---|
| Data collection | Minimal & consent-bound | Continuous & expansive |
| Model improvement | Not data-dependent | Requires ongoing ingestion |
| Surveillance risk | None by design | Structural |
| Data monetization | Prohibited | Often intrinsic |

OHI™ systems are designed to function without data exploitation.
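Minimal, consent-bound collection can be sketched as a single filtering function. The sketch assumes that each task declares the fields it needs and that a data subject's consent is recorded as a set of field names. The `collect` function and its parameters are hypothetical.

```python
from typing import Mapping


def collect(
    submitted: Mapping[str, object],
    consented: frozenset[str],
    needed: frozenset[str],
) -> dict[str, object]:
    """Keep only the fields the task needs, and only if each one has consent."""
    missing_consent = needed - consented
    if missing_consent:
        # Without consent for a required field, collection does not proceed at all.
        raise PermissionError(f"no consent recorded for: {sorted(missing_consent)}")
    # Anything outside the declared need is dropped, even if consent exists for it.
    return {key: value for key, value in submitted.items() if key in needed}
```

Fields outside the task's declared needs are discarded even when consent exists. A missing consent stops collection rather than letting it proceed with partial data.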

Operational Domains

AI Core Is Suitable For

- Content generation

- Pattern recognition

- Recommendation systems

- Non-critical automation

OHI Consciousness Engine™ Is Required For

- Transportation safety systems

- Financial governance

- Legal & constitutional enforcement

- Identity systems

- Infrastructure control

- Sovereign or regulated environments

Failure Handling

AI Core:

Failure manifests as incorrect output presented with confidence.

OHI Consciousness Engine™:

Failure manifests as controlled non-execution with traceable reasoning.

This distinction is critical in systems where silence is safer than error.
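One way to make non-execution traceable is to record every outcome, including refusals, together with the checks that failed. The sketch assumes failed checks are reported by name. The `TraceEntry` and `DecisionTrace` types and the JSON Lines export format are hypothetical choices for illustration.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Sequence


@dataclass(frozen=True)
class TraceEntry:
    decision_id: str
    executed: bool
    reasons: tuple[str, ...]  # names of failed checks; empty when the decision executed
    timestamp: str  # UTC, ISO 8601


class DecisionTrace:
    """Append-only log of decision outcomes, refusals included."""

    def __init__(self) -> None:
        self._entries: list[TraceEntry] = []

    def record(self, decision_id: str, failed_checks: Sequence[str]) -> TraceEntry:
        entry = TraceEntry(
            decision_id=decision_id,
            executed=not failed_checks,
            reasons=tuple(failed_checks),
            timestamp=datetime.now(timezone.utc).isoformat(),
        )
        self._entries.append(entry)
        return entry

    def entries(self) -> tuple[TraceEntry, ...]:
        # Immutable snapshot of frozen entries: callers cannot edit what they receive.
        return tuple(self._entries)

    def export_jsonl(self) -> str:
        """Serialize the trace as JSON Lines for external audit."""
        return "\n".join(json.dumps(asdict(e)) for e in self._entries)
```

A refused decision still leaves an entry that names the conditions it failed. The "silence" is therefore itself auditable.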

Strategic Implication

The OHI Consciousness Engine™ is not a competitive alternative to AI cores—it is a categorical successor designed for domains where AI’s probabilistic nature is a liability rather than an advantage.

It enables the transition:

- From prediction → verification

- From inference → governance

- From opaque automation → accountable intelligence

Summary

Artificial intelligence cores optimize for likelihood.

The OHI Consciousness Engine™ optimizes for correctness, safety, and sovereignty.

AI answers questions.

OHI™ governs decisions.

This difference defines the boundary between automation and intelligence suitable for civilization-scale responsibility.
